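▪In the previous post, we introduced the concepts and types of performance testing (Load, Stress, Soak).

The tests of the past few days verified whether the system is "correct"; today's performance tests verify whether it is "fast enough" and "can hold up under load".

▪By combining a CI/CD pipeline with Locust, we can automate performance testing and check on every PR/push whether system performance still meets expectations. The flow looks like this: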

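▪ A PR or push triggers the workflow

▪ Start the API (locally on the runner, or point at staging)

▪ Run the Locust load test

▪ Generate an HTML report

▪ Upload the reports as Artifacts; optionally set performance thresholds so that CI fails outright when they are not met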
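The threshold gate comes down to two numbers. p95 is the latency that at least 95% of requests stay at or under, and the error rate is failed requests divided by total requests. Here is a minimal sketch of both, using the nearest-rank definition of a percentile. The function names are illustrative. Locust computes its own p95 from rounded response-time buckets, so its figures may differ slightly from this exact calculation.

```python
import math


def nearest_rank_percentile(samples_ms: list[float], pct: float) -> float:
    """Return the nearest-rank percentile (pct in 0..1) of latency samples."""
    if not samples_ms:
        return 0.0
    ordered = sorted(samples_ms)
    # 1-based rank: the smallest value with at least pct of samples at or below it
    rank = max(1, math.ceil(pct * len(ordered)))
    return ordered[rank - 1]


def error_rate(num_requests: int, num_failures: int) -> float:
    """Failed requests / total requests, 0.0 when nothing was sent."""
    return num_failures / num_requests if num_requests else 0.0


latencies = [float(ms) for ms in range(10, 210, 10)]  # 20 samples: 10, 20, ..., 200 ms
print(nearest_rank_percentile(latencies, 0.95))  # 190.0
print(error_rate(200, 2))  # 0.01
```

With thresholds of 200 ms and 0.01, this sample set would pass: p95 is 190 ms and the error rate equals the limit without exceeding it.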

▪ GitHub Actions workflow (placed under `.github/workflows/`):

```yaml
name: Performance Tests

on:
  workflow_dispatch: {}
  pull_request:
    branches: [ main ]
  push:
    branches: [ main ]

jobs:
  perf-locust:
    runs-on: ubuntu-latest
    env:
      USERS: "60"
      SPAWN_RATE: "8"
      DURATION: "2m"
      THRESHOLD_RT_P95_MS: "200"
      THRESHOLD_ERROR_RATE: "0.01"
    steps:
      - uses: actions/checkout@v4

      - uses: actions/setup-python@v5
        with:
          python-version: '3.11'

      # Install dependencies (adjust for your project)
      - name: Install deps
        run: |
          # `|| true` keeps going if the project has no requirements.txt
          pip install -r requirements.txt || true
          pip install -r requirements-perf.txt

      # If the TARGET_BASE_URL secret is not set, default to the local API
      - name: Resolve TARGET_BASE_URL
        run: |
          if [ -z "${{ secrets.TARGET_BASE_URL }}" ]; then
            echo "TARGET_BASE_URL=http://127.0.0.1:8000" >> "$GITHUB_ENV"
          else
            echo "TARGET_BASE_URL=${{ secrets.TARGET_BASE_URL }}" >> "$GITHUB_ENV"
          fi

      # Start your API (adjust to your own start command)
      - name: Start API (background)
        if: env.TARGET_BASE_URL == 'http://127.0.0.1:8000'
        run: |
          uvicorn app.main:app --host 0.0.0.0 --port 8000 & echo $! > api.pid
          # Wait for the health check to become ready (up to ~30 s)
          for i in {1..30}; do
            curl -fsS http://127.0.0.1:8000/health && break || sleep 1
          done
          # Fail this step if the API never became healthy
          curl -fsS http://127.0.0.1:8000/health

      - name: Run Locust (headless)
        run: make perf

      - name: Upload reports
        if: always()
        uses: actions/upload-artifact@v4
        with:
          name: perf-reports
          path: reports/
```
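The readiness wait in the `Start API` step polls `/health` up to 30 times, one second apart. Here is the same idea as a small Python sketch, which makes the "give up after N tries" outcome an explicit return value. `http_ok` and `wait_until_ready` are hypothetical helper names, and the probe and sleep functions are injectable so the loop can be tested without a real server.

```python
import time
import urllib.request
from typing import Callable


def http_ok(url: str, timeout: float = 2.0) -> bool:
    """True if GET url answers with a 2xx status (roughly what `curl -fsS` checks)."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return 200 <= resp.status < 300
    except OSError:
        # URLError, HTTPError (4xx/5xx), refused connections and timeouts all land here
        return False


def wait_until_ready(
    probe: Callable[[], bool],
    attempts: int = 30,
    delay_s: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Call probe up to `attempts` times, sleeping `delay_s` after each failure."""
    for _ in range(attempts):
        if probe():
            return True
        sleep(delay_s)
    return False
```

In CI you would call `wait_until_ready(lambda: http_ok("http://127.0.0.1:8000/health"))` and exit with a non-zero code when it returns `False`, so the job stops before Locust runs against an API that is not up.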

▪ Makefile (recipe lines must be indented with a tab; `$$` escapes `$`, so the shell sees `${USERS:-50}`):

```makefile
.PHONY: perf

perf:
	@mkdir -p reports
	@python -m pip install --upgrade pip >/dev/null
	@pip install -r requirements-perf.txt
	@locust -f tests/perf/locustfile.py \
		--headless -u $${USERS:-50} -r $${SPAWN_RATE:-10} --run-time $${DURATION:-2m} \
		--host $${TARGET_BASE_URL:-http://localhost:8000} \
		--html reports/locust-report.html --csv reports/locust
```
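The `${VAR:-default}` expansions in the Makefile mean that the workflow's `env` values override the defaults. For example, `USERS: "60"` beats `50`. A variable that is set but empty also falls back to the default. Here is a small Python sketch of the same precedence that builds the equivalent `locust` argument list. The `DEFAULTS` dict and function names are illustrative and not part of the original project.

```python
import os
from typing import Mapping

# Same defaults as the Makefile's ${VAR:-default} expansions
DEFAULTS = {
    "USERS": "50",
    "SPAWN_RATE": "10",
    "DURATION": "2m",
    "TARGET_BASE_URL": "http://localhost:8000",
}


def env_or_default(env: Mapping[str, str], key: str) -> str:
    # ${VAR:-default} falls back when VAR is unset OR set to an empty string
    return env.get(key) or DEFAULTS[key]


def locust_argv(env: Mapping[str, str] = os.environ) -> list[str]:
    """Build the headless locust command line the Makefile runs."""
    return [
        "locust", "-f", "tests/perf/locustfile.py",
        "--headless",
        "-u", env_or_default(env, "USERS"),
        "-r", env_or_default(env, "SPAWN_RATE"),
        "--run-time", env_or_default(env, "DURATION"),
        "--host", env_or_default(env, "TARGET_BASE_URL"),
        "--html", "reports/locust-report.html",
        "--csv", "reports/locust",
    ]
```

Running it would look like `subprocess.run(locust_argv(), check=True)`. Passing a list instead of a shell string avoids quoting issues with URLs.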

▪ `tests/perf/locustfile.py`:

```python
import os

from locust import HttpUser, between, events, task

# Thresholds come from the workflow's env; defaults apply when run locally
THRESHOLD_RT_P95_MS = int(os.getenv("THRESHOLD_RT_P95_MS", "200"))
THRESHOLD_ERROR_RATE = float(os.getenv("THRESHOLD_ERROR_RATE", "0.01"))


class WebsiteUser(HttpUser):
    # Each simulated user waits 1 to 3 seconds between tasks
    wait_time = between(1, 3)

    @task(2)
    def health(self):
        self.client.get("/health")

    @task(3)
    def add_api(self):
        self.client.get("/add?a=10&b=20")


@events.test_stop.add_listener
def validate_thresholds(environment, **kwargs):
    stats = environment.runner.stats
    total = stats.total
    p95 = total.get_response_time_percentile(0.95) if total.num_requests else 0
    error_rate = (total.num_failures / total.num_requests) if total.num_requests else 0.0
    print(f"[THRESHOLDS] p95={p95}ms error_rate={error_rate:.4f}")

    violations = []
    if p95 > THRESHOLD_RT_P95_MS:
        violations.append(f"P95 {p95}ms > {THRESHOLD_RT_P95_MS}ms")
    if error_rate > THRESHOLD_ERROR_RATE:
        violations.append(f"Error rate {error_rate:.4f} > {THRESHOLD_ERROR_RATE:.4f}")

    if violations:
        for v in violations:
            print(f"[THRESHOLD VIOLATION] {v}")
        environment.process_exit_code = 1  # non-zero exit code makes the CI step fail
```
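Because `validate_thresholds` needs a live Locust `Environment`, the pass/fail decision is hard to unit-test on its own. One option is to split the rules into a pure function, sketched below, if you are willing to restructure the locustfile that way. `threshold_violations` is a hypothetical helper name, not a Locust API.

```python
def threshold_violations(
    num_requests: int,
    num_failures: int,
    p95_ms: float,
    max_p95_ms: float = 200,
    max_error_rate: float = 0.01,
) -> list[str]:
    """Same rules as validate_thresholds, without needing a Locust Environment."""
    error_rate = num_failures / num_requests if num_requests else 0.0
    violations = []
    if p95_ms > max_p95_ms:
        violations.append(f"P95 {p95_ms}ms > {max_p95_ms}ms")
    if error_rate > max_error_rate:
        violations.append(f"Error rate {error_rate:.4f} > {max_error_rate:.4f}")
    return violations
```

Inside the listener you could then call `threshold_violations(total.num_requests, total.num_failures, p95, THRESHOLD_RT_P95_MS, THRESHOLD_ERROR_RATE)` and set `environment.process_exit_code = 1` whenever the returned list is non-empty.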

▪ `requirements-perf.txt`:

```
locust==2.32.1
```

▪ Push to GitHub:

```bash
git init
git branch -M main
git remote add origin <url>  # replace <url> with the actual repo location
git add .
git commit -m "add perf testing pipeline"
git push -u origin main
```

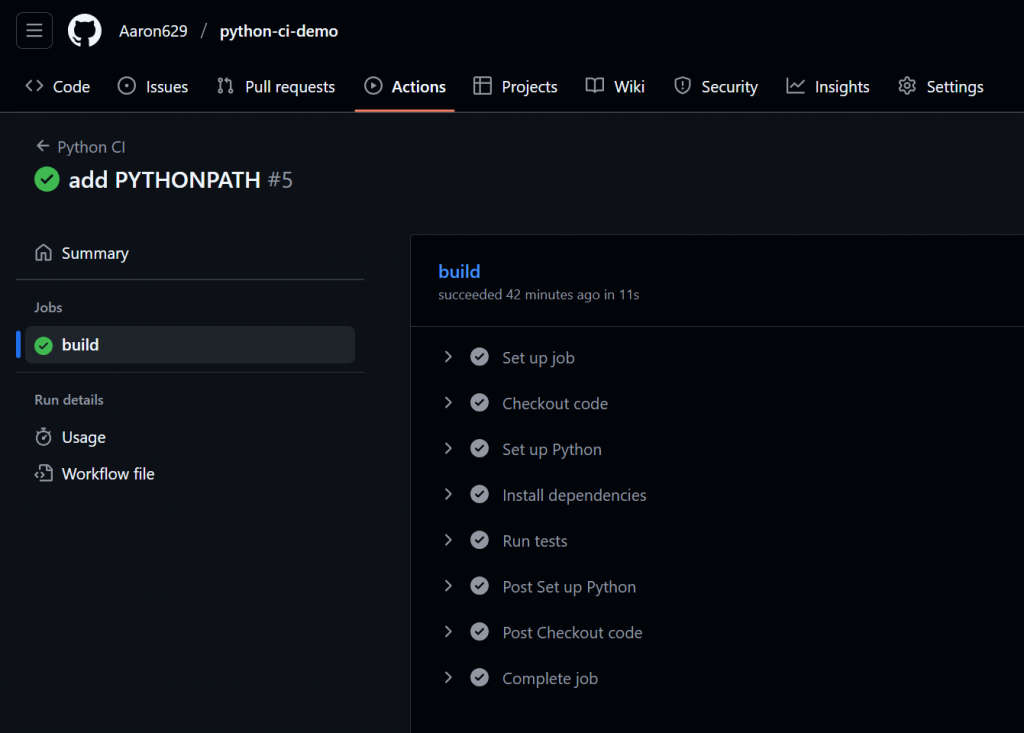

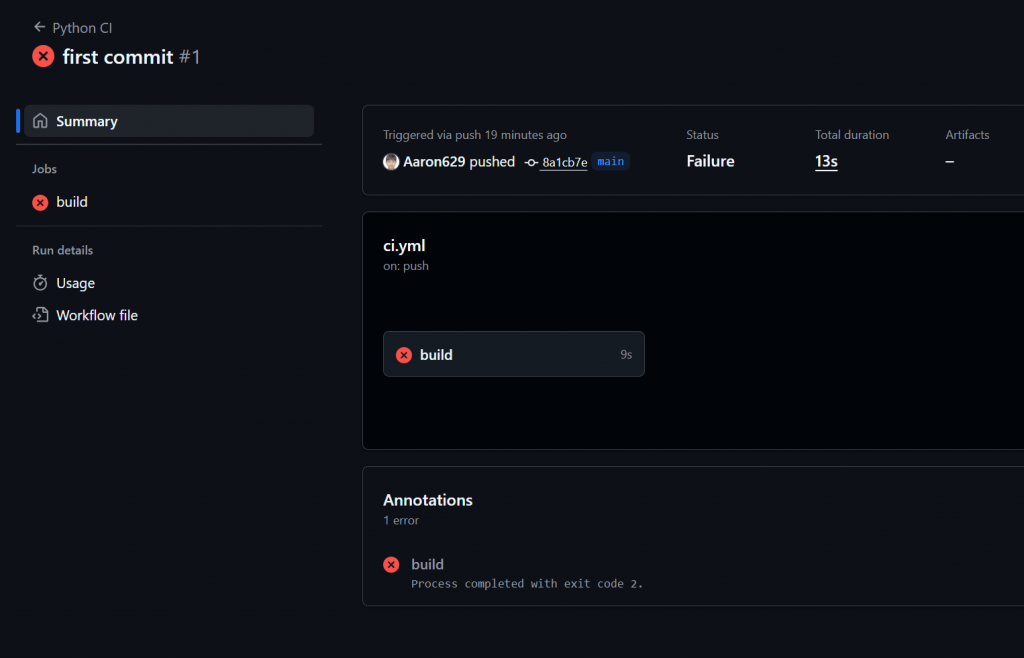

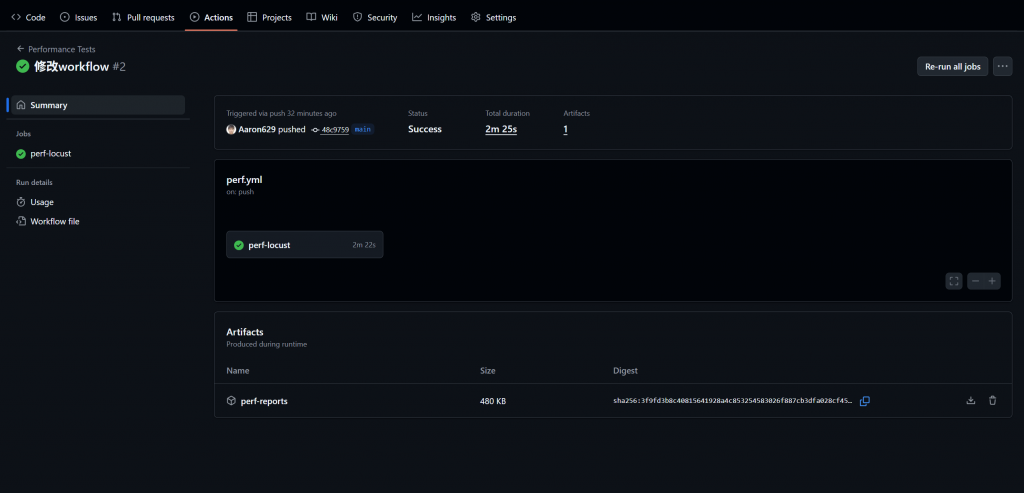

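▪Go to the GitHub repo → Actions tab to see the pipeline run automatically

▪On success, the run shows a green "✔ Passed"

▪On failure, the run shows red, along with error messages to help with troubleshooting

Go to Actions → Workflow Summary → Artifacts section and download perf-reports.zip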

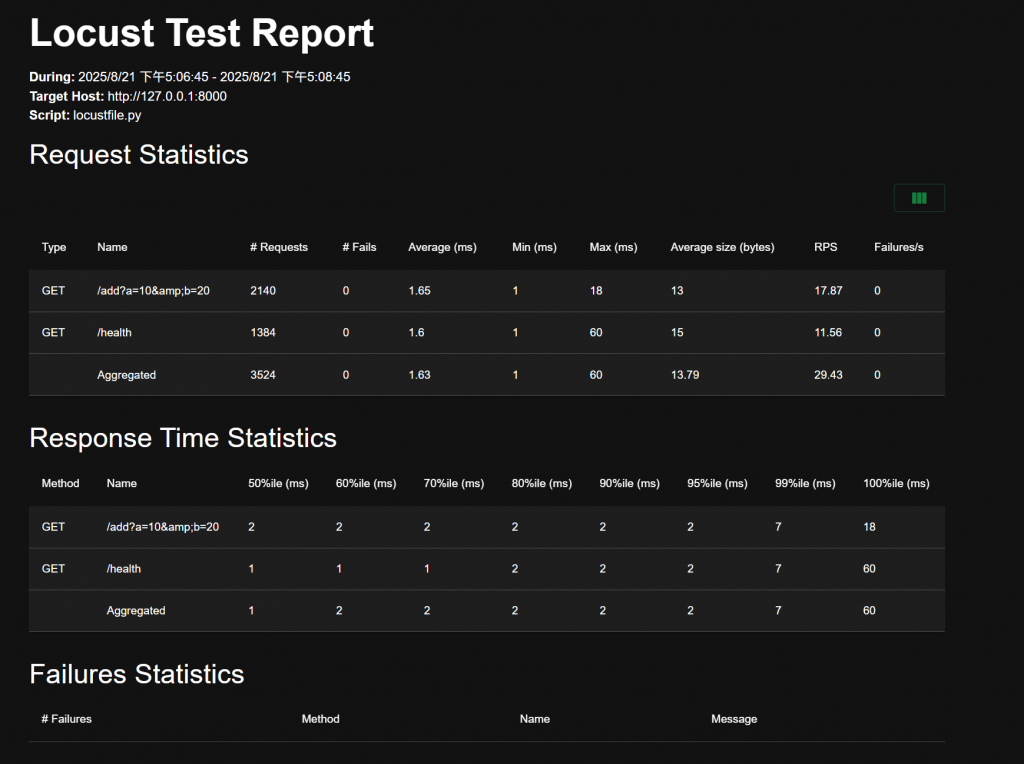

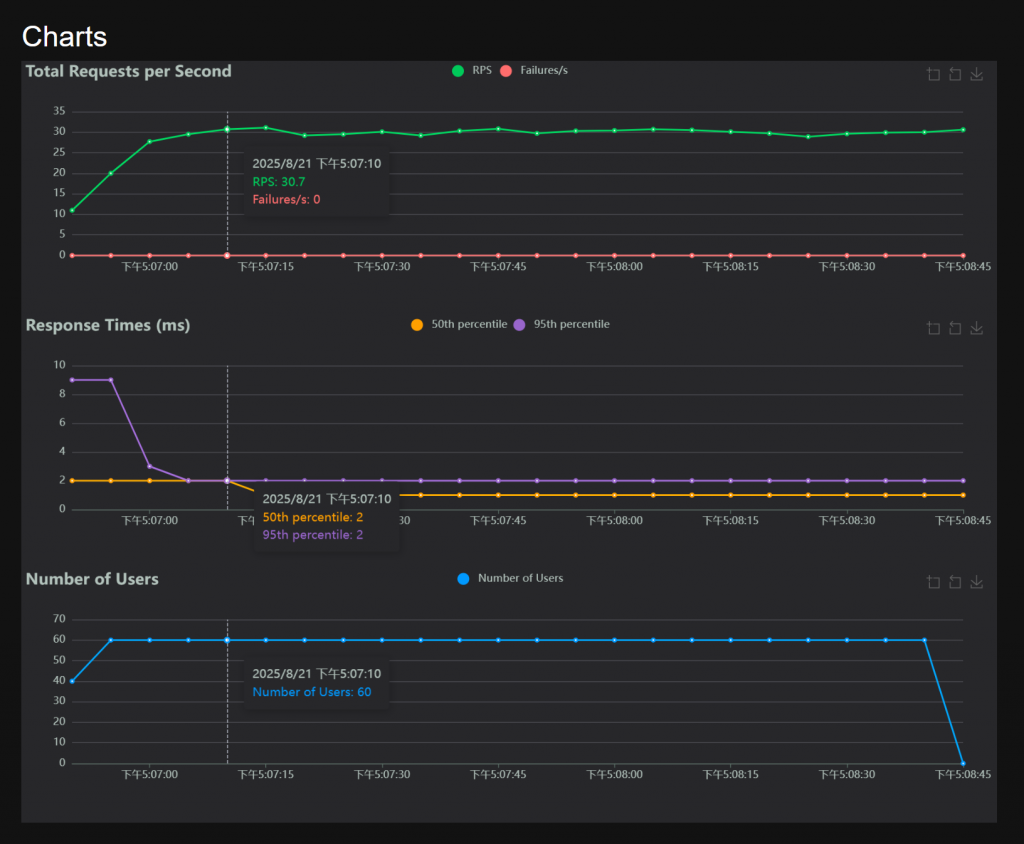

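▪After unzipping, you will find several CSV files and an interactive HTML report

▪Open locust-report.html to view the request count, latency distribution, error rate, and other results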
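The CSV files come from `--csv reports/locust`, which writes files such as `reports/locust_stats.csv`. If you want a quick numeric summary outside the HTML report (for example, in a later CI step), the sketch below reads the `Aggregated` row. It assumes Locust 2.x column headers (`Name`, `Request Count`, `Failure Count`, `95%`), so check them against your own file. `aggregated_summary` is a hypothetical helper.

```python
import csv
from pathlib import Path


def aggregated_summary(stats_csv: Path) -> dict:
    """Read the 'Aggregated' row from a Locust *_stats.csv file (assumed 2.x headers)."""
    with stats_csv.open(newline="", encoding="utf-8") as fh:
        for row in csv.DictReader(fh):
            if row.get("Name") == "Aggregated":
                requests = int(row["Request Count"])
                failures = int(row["Failure Count"])
                p95 = row.get("95%", "N/A")
                return {
                    "requests": requests,
                    "failures": failures,
                    "error_rate": failures / requests if requests else 0.0,
                    # Percentile columns may read 'N/A' when no requests were made
                    "p95_ms": None if p95 in ("", "N/A") else float(p95),
                }
    raise ValueError(f"No 'Aggregated' row found in {stats_csv}")
```

Usage: `print(aggregated_summary(Path("reports/locust_stats.csv")))` after the `make perf` step.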

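▪With GitHub Actions and Locust, we have integrated performance testing into the CI/CD workflow:

▪Every PR/push automatically runs a load test

▪An interactive report is generated automatically for easy analysis

▪If the configured performance thresholds are not met, the CI pipeline fails automatically; with the job set as a required status check, this keeps underperforming code out of the main branch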

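Performance testing confirms the system can hold up under load. Next, we need to think about how to deliver the application in a stable and portable way.

Next post 👉 we will look at Docker containerization and image building as the foundation for deployment and scaling.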